- Foreword

- Preface

- Simple Linear Regression—Description

- Ordinary Least Squares (OLS) Estimation

- Correlation and Standardized Regression

- Coefficient-Level Inference

- Model-Level Inference

- Introduction to Multiple Regression

- Understanding Statistical Control

- Distributional Assumptions Underlying the Regression Model

- Dichotomous Categorical Predictors

- Polychotomous Categorical Predictors

- Introduction to Interaction Effects

- More Interaction Effects

- References

Simple Linear Regression—Description

In this set of notes, you will begin your foray into regression analysis. To do so, we will use the riverview.csv data to examine whether education level is related to income. The data contain five attributes collected from a random sample of city.

# Load libraries

library(dplyr)

library(ggplot2)

library(readr)

# Read in data

city = read_csv(file = "https://raw.githubusercontent.com/zief0002/modeling/master/data/riverview.csv")

head(city)# A tibble: 6 × 5

education income seniority gender party

<dbl> <dbl> <dbl> <chr> <chr>

1 8 26.4 9 female Independent

2 8 37.4 7 Not female Democrat

3 10 34.2 16 female Independent

4 10 25.5 1 female Republican

5 10 47.0 14 Not female Democrat

6 12 46.5 11 female Democrat Data Exploration

Any analysis should start with an initial exploration of the data. During this exploration, you should examine each of the variables that you will be including in the regression analysis. This will help you understand results you get in later analyses, and will also help foreshadow potential problems with the analysis. This blog post describes initial ideas of data exploration reasonably well. You could also refer to almost any introductory statistics text for additional detail.

It is typical to begin by exploring the distribution of each variable used in the analysis separately. These distributions are referred to as marginal distributions. After that, it is appropriate to explore the relationships between the variables.

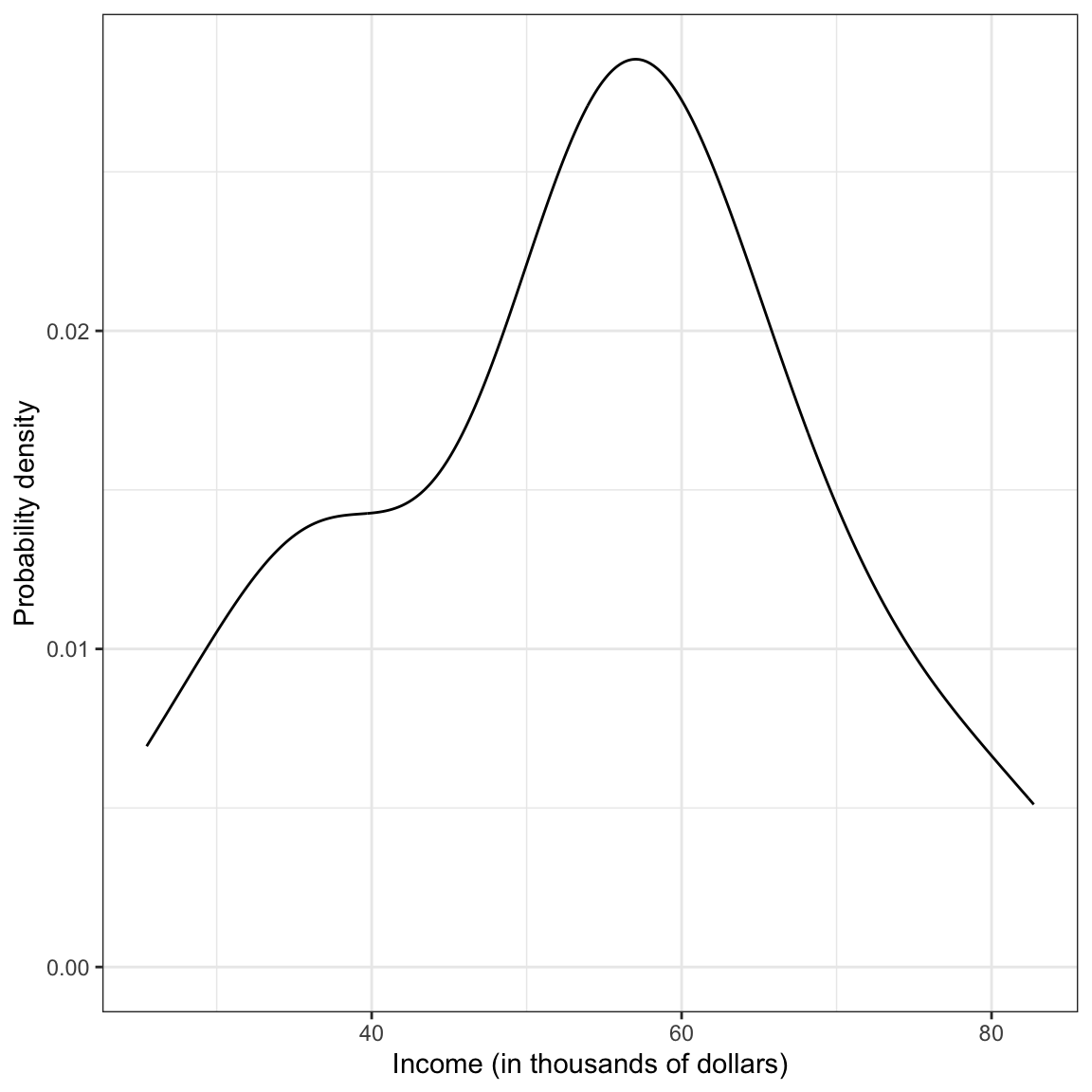

Marginal Distribution of Income

To begin this exploration, we will examine the marginal distribution of employee incomes. We can plot a marginal distribution using functionality from the ggplot2 package.

ggplot(data = city, aes(x = income)) +

stat_density(geom = "line") +

theme_bw() +

xlab("Income (in thousands of dollars)") +

ylab("Probability density")

Figure 1: Density plot of employee incomes.

This plot suggests that the distribution of employee incomes is unimodal and most of the incomes are between roughly $50,000 and $70,000. The smallest income in the sample is about $25,000 and the largest income is over $80,000. This indicates there is a fair amount of variation in the data.

To further summarize the distribution, it is typical to compute and report summary statistics such as the mean and standard deviation. One way to compute these values is to use functions from the dplyr library.

city %>%

summarize(

M = mean(income),

SD = sd(income)

)# A tibble: 1 × 2

M SD

<dbl> <dbl>

1 53.7 14.6Describing this variable we might write,

The marginal distribution of income is unimodal with a mean of approximately $53,700. There is variation in employees’ salaries (SD = $14,500).

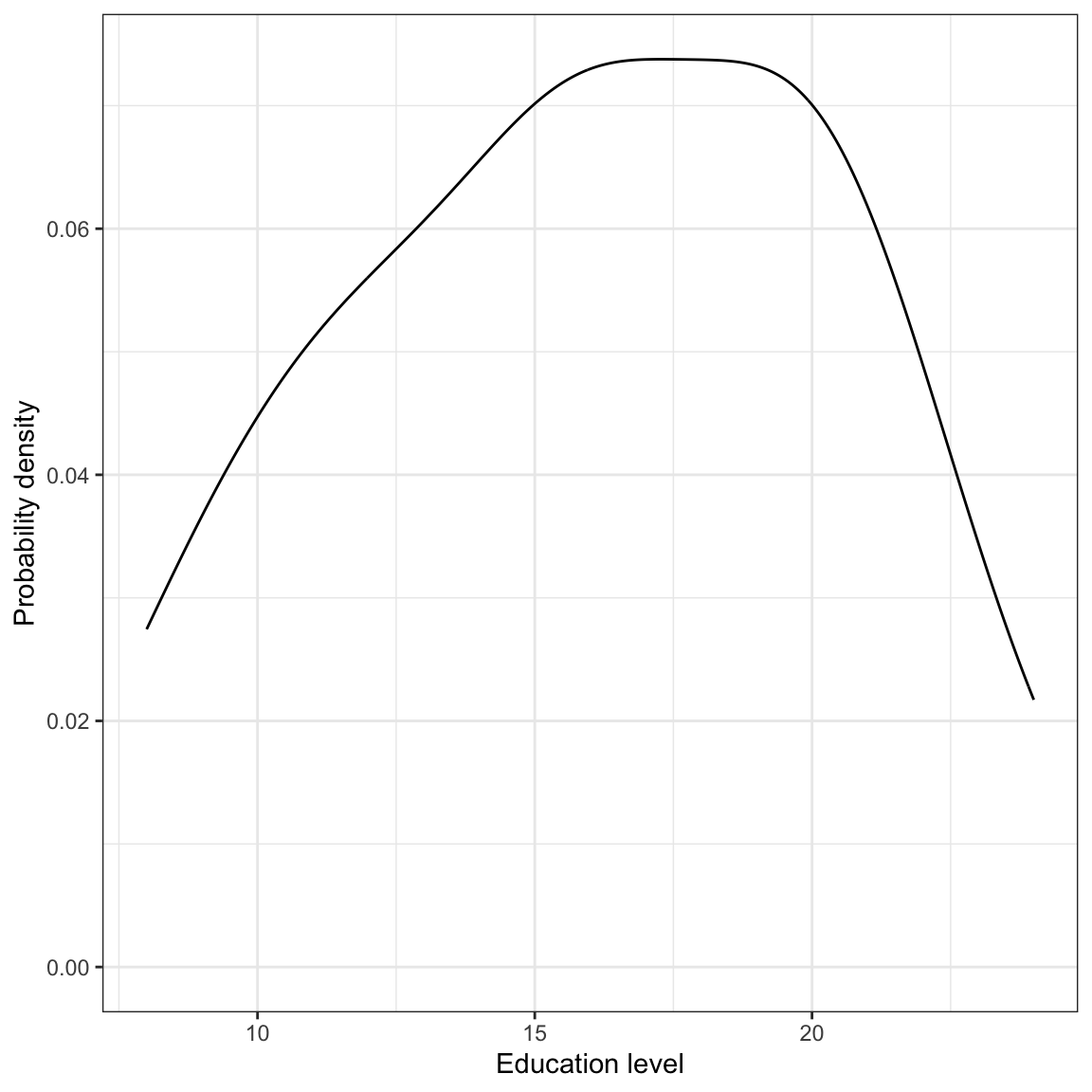

Marginal Distribution of Education Level

We will also examine the marginal distribution of the education level variable.

# Plot

ggplot(data = city, aes(x = education)) +

stat_density(geom = "line") +

theme_bw() +

xlab("Education level") +

ylab("Probability density")

Figure 2: Density plot of employee education levels.

# Summary statistics

city %>%

summarize(

M = mean(education),

SD = sd(education)

)# A tibble: 1 × 2

M SD

<dbl> <dbl>

1 16 4.36Again, we might write,

The marginal distribution of education is unimodal with a mean of 16 years. There is variation in employees’ level of education (SD = 4.4).

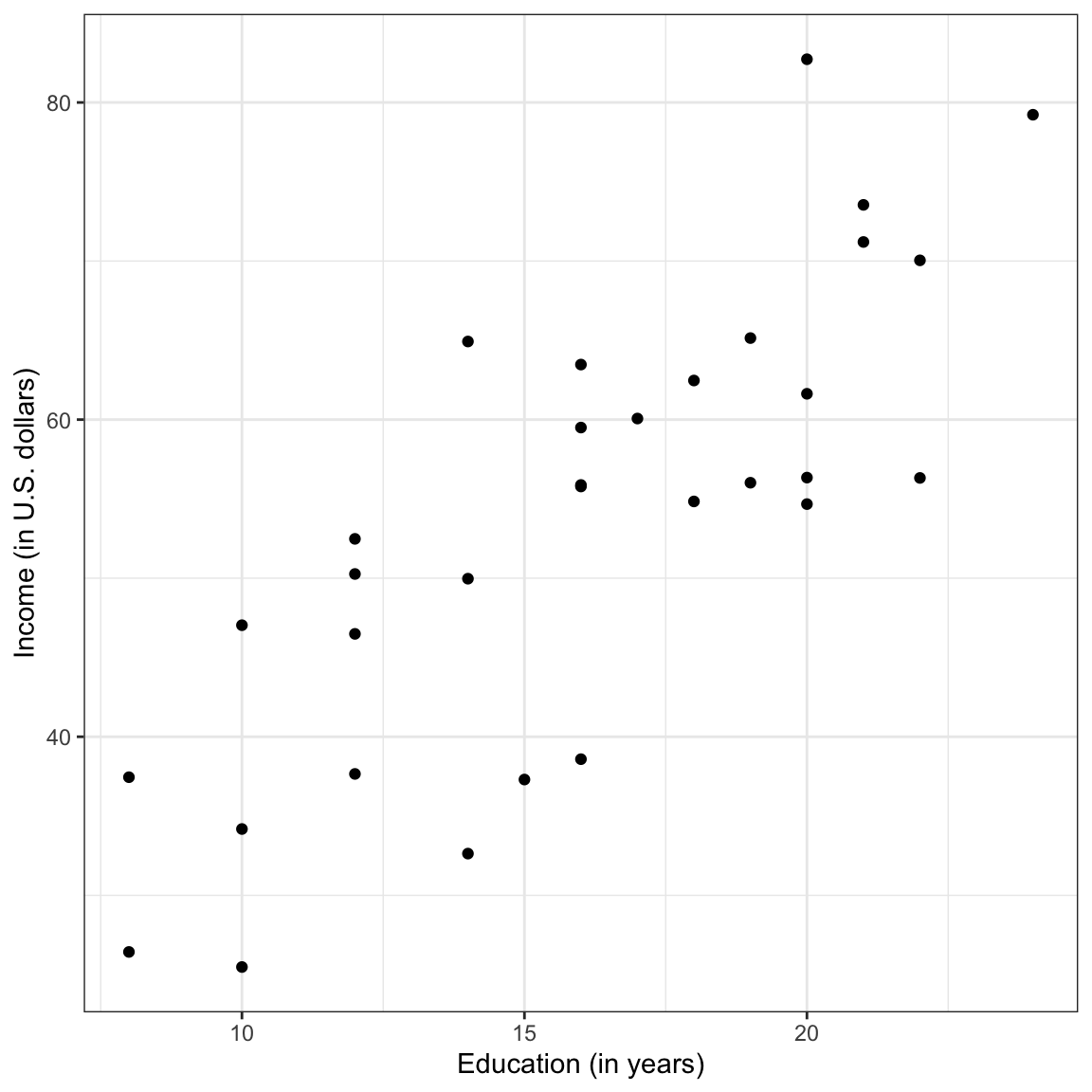

Relationship Between Variables

Although examining the marginal distributions is an important first step, those descriptions do not help us directly answer our research question. To better understand any relationship between income and education level we need to explore how the distribution of income differs as a function of education. To do this, we will create a scatterplot of incomes versus education.

ggplot(data = city, aes(x = education, y = income)) +

geom_point() +

theme_bw() +

xlab("Education (in years)") +

ylab("Income (in U.S. dollars)")

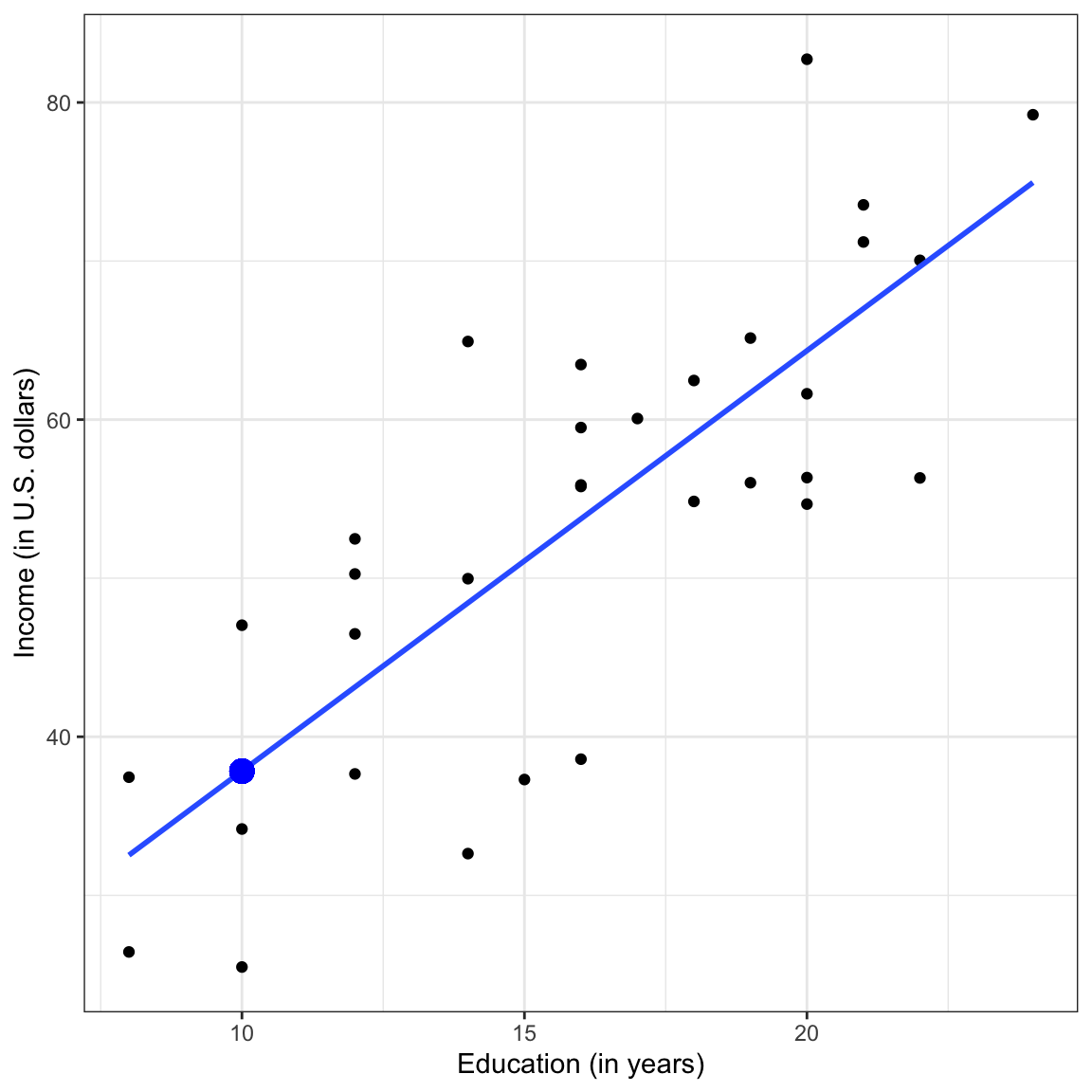

Figure 3: Scatterplot displaying the relationship between employee education levels and incomes.

The plot suggests a relationship (at least for these employees) between level of education and income. When describing the relationship we want to touch on four characteristics of the relationship:

- Functional form of the relationship

- Direction

- Strength

- Observations that do not fit the trend (outliers)

Statistical Model: Mathematical Description of the Data

Since the relationship’s functional form seems reasonably linear, we will use a linear model to describe the data. We can express this model mathematically as,

In this equation,

The linear statistical model (i.e., the regression model) can be separated into two components: a systematic (or fixed) component and a random (or stochastic) component.

Systematic Part of the Statistical Model

The systematic (fixed) part of the equation gives the mean value of

Note that the systematic part of the equation does not include the error term. The error term is a part of the random component of the model. Statisticians also use

The terms

For example, the difference between the mean income for those employees who have 10 years of education and those that have 11 years of education is the same as the difference between the mean income for those employees who have 17 years of education and those that have 18 years of education. Or, the difference between the mean income for those employees who have 4 years of education and those that have 8 years of education is the same as the difference between the mean income for those employees who have 20 years of education and those that have 24 years of education.

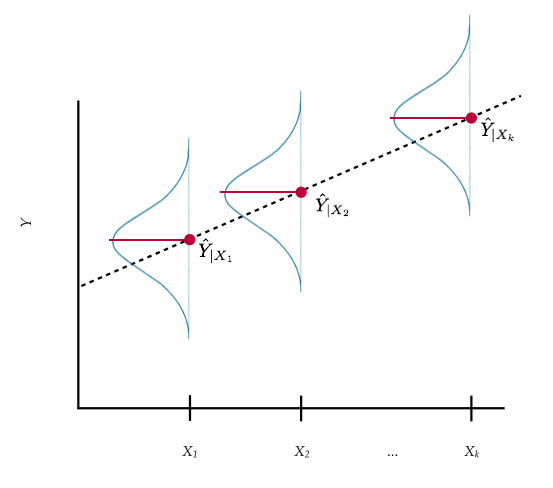

Visual Representation of the Regression Model

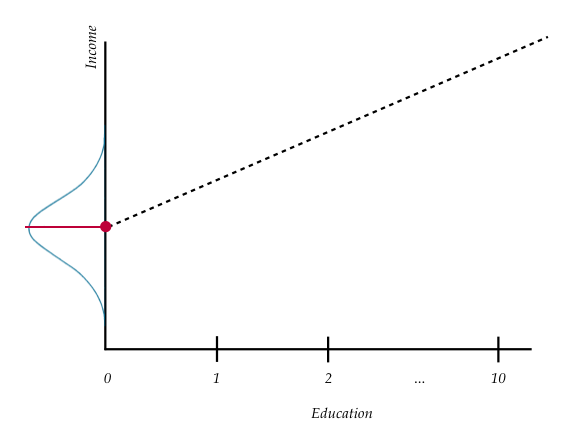

To help better understand the model, consider the following plot:

Figure 4: Plot displaying conditional distribution of

At each value of

Each conditional distribution of

Random Part of the Statistical Model

From the visual representation of the model, we can see that there is a distribution of

This equation implies that each observed

Or, using the

To compute an observation’s residual, we compute the difference between the observation’s

To further understand the residual term, consider the plot below. This figure shows the relationship between education and income we plotted earlier, and also includes the regression line.

Figure 5: Scatterplot displaying the relationship between employee education levels and incomes. The OLS fitted regression line is also displayed.

Consider the three employees that have an education level of 10 years. The conditional mean income for these three employees is approximately $37,800. This is denoted by the blue point. Remember, the conditional means are on the regression line. The error (residual) term allows for discrepancy between the conditional mean of

Graphically, the residual is represented by the vertical distance between the line and a given point on the scatterplot. Some of those points are above the line (they have a positive residual) and some are below the line (they have a negative residual). Also note that for some observations the error term is smaller than for others.

Estimating Parameters in the Regression Model

The regression model describes the relationship between

In most statistical analyses, you will use a sample of data (not the entire population) to estimate the parameter values. Because a sample is only a subset of the population, the values we obtain for the parameters are imperfect estimates. To denote that the parameters are sample-based estimates, we add a hat to each parameter we are estimating. For example, estimates of the parameter estimates of

Applied researchers and statisticians tend to focus their analysis on the systematic (fixed) part of the model, and are thus often most interested in the values of the regression coefficients. After fitting the model to data, the estimated conditional means can be expressed mathematically as:

Or, using the notation

We use the hats when we are indicating sample-based estimates of these values, so hats should be used when you are reporting the values obtained after using a sample of data to obtain the values.2 The two estimated parameters of interest here (

Estimating Residuals

Note that we can also use the estimated regression coefficients to obtain estimates for the residuals, often referred to as the observed residuals. Here we make use of the earlier idea that the residual term was the difference between the observed value of

Once we use data to obtain estimates for the intercept and slope (

Or, using the “Y-hat” notation,

Remember, the hat on the residual indicates it is an estimate based on values obtained from the data!

Fitting a Regression Model to Data Using R

To fit the regression model to data using R, we will use the lm() function. The syntax for this function looks like this:

lm(outcome ~1 +predictor,data =dataframe)

where outcome is the name of the outcome/response variable, predictor is the name of the predictor variable, and dataframe is the name of the data frame. (The 1 on the right side of the tilde tells R to include the intercept in its computation.) When we fit a regression model in R, we will also assign the output to a new object in R. Below, we fit the model using education level to predict income. Here the output is assigned to an object called lm.a. We can print the regression parameter estimates by typing the lm() object name and hitting enter.

# Fit regression model

lm.a = lm(income ~ 1 + education, data = city)

# Print regression coefficients

lm.a

Call:

lm(formula = income ~ 1 + education, data = city)

Coefficients:

(Intercept) education

11.321 2.651 Here the parameter estimates (or regression coefficients) are:

Remember that these are estimates and need the hats. The systematic part of the fitted regression model (or fitted equation) is:

Intercept Interpretation

The estimate for the intercept was 11.321. Graphically, this value indicates the value where the line passes through the

To interpret this value, remember that

Figure 6: Plot displaying conditional distribution of

Interpreting the intercept coefficient from our example,

The model predicted mean income for all employees that have an education level of 0 years is $11,321.

Slope Interpretation

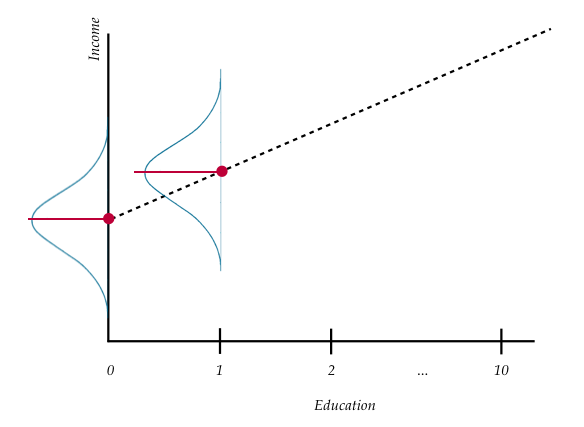

Recall from algebra that the slope of a line describes the change in

Again, because

Figure 7: Plot displaying conditional distribution of

Interpreting the slope coefficient in our example,

Each one-year difference in education level is associated with a model predicted difference of $2,651 in mean income.

To better understand this, consider the mean income for city employees with three different education levels. The first set of employees have an education level of 10 years. The second set have an education level of 11 years, and the third set have an education level of 12 years. Now let’s compute each set of employees’ mean income using the estimated regression coefficients.

Each set of employees’ education levels differ by one year (10 to 11 to 12). The difference in predicted mean incomes for these employees differs by 2.651 thousand dollars.

Estimating Residuals

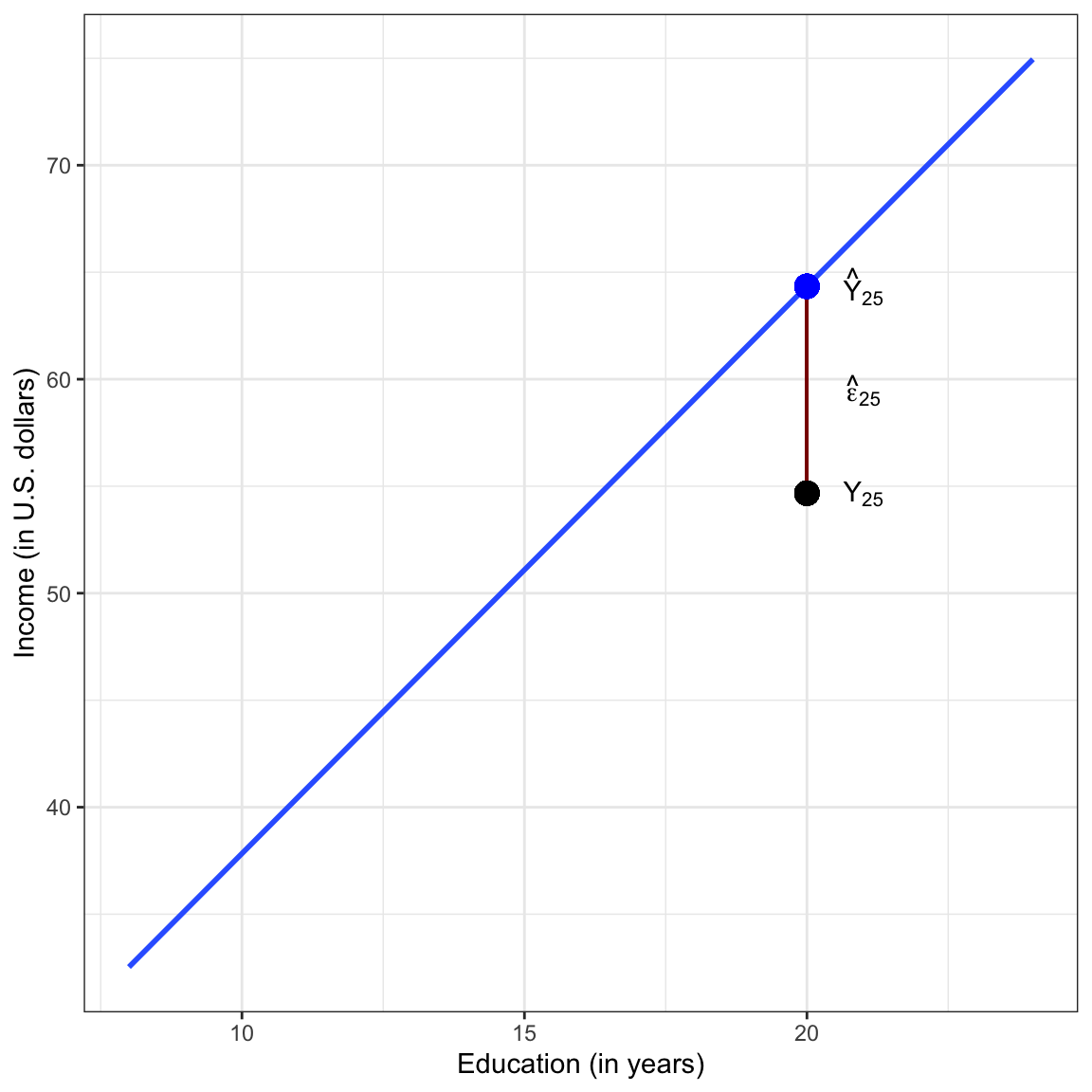

Consider the 25th case in the data frame.

city %>%

filter(row_number() == 25)# A tibble: 1 × 5

education income seniority gender party

<dbl> <dbl> <dbl> <chr> <chr>

1 20 54.7 12 female IndependentThis employee (Employee 25) has an education level of 20 years (

# Y_hat = b0 + b1 * X

11.321 + 2.651 * 20[1] 64.341We could also report this using the conditional mean notation,

Now we can use the estimated conditional mean to also compute Employee 25’s residual.

# e = Y - Y_hat

54.672 - 64.341[1] -9.669Using mathematical notation,

The negative residual,

Figure 8: Plot displaying the OLS fitted regression line (blue) between employee education levels and incomes. The 25th employee’s observed data (black dot) is plotted, and a visual representation of the employee’s residual (red line) is also displayed.

Answering the Research Question

Remember that this whole analysis was driven because we wanted to answer a question, namely whether education level is related to income for the Riverview employees. The results from the regression analysis allows us to answer this question.

To answer the question of whether education level is related to income, a linear regression model was fitted to the data using ordinary least squares estimation. The results of this analysis suggested that education level is positively related to income for the 32 employees (

). Each year of education is associated with a $2,651 difference in income, on average.

Here the regression analysis provides a quantitative summary of the relationship between education level and income. It provides us information about the direction of the relationship (positive) and the magnitude of that relationship. Although this can give us a description of the realtionship, it is only for the sample of data you looked at (i.e., for these 32 employees). To make further statements about whether there is a relationship between education level and income in a broader popualtion (e.g., all Riverview employees, or all California residents), we need more information, namely whether the sample is representative of the larger population and also statistical information about the amount of sampling error we have. (We will cover sampling error in Chapter 5.)

Technically there are many unknown residuals, one for each case, but the assumptions we put on the linear model make it so that we only care about the variance of the residuals, hence a single unknown.↩︎

Sometimes people use Roman letters when referring to sample estimates rather than hatting the Greek letters. For example, the sample-based equation might be denoted: